CoreDNS (kube-dns) resolution truncation issue on Kubernetes (Amazon EKS)

This article describes a DNS resolution issue you should be aware of on Kubernetes. It can happen when your Pod relies on CoreDNS (kube-dns) to resolve DNS records while connecting to Amazon ElastiCache, or to any target that returns a large response payload.

Note: To help you follow the details, I use Amazon EKS with Kubernetes version 1.15 and CoreDNS (v1.6.6-eksbuild.1) as the example.

What’s the problem

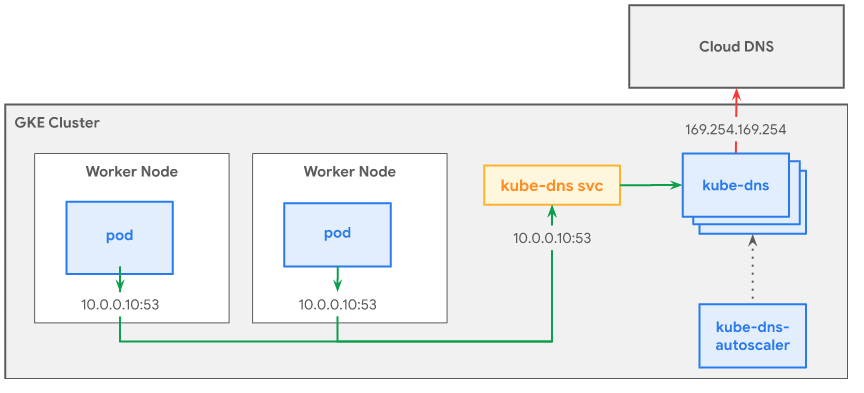

Kubernetes adds its own layer of DNS resolution: by default, containerized applications running in a cluster rely heavily on the cluster's own DNS service, the kube-dns add-on, which brings the extra benefit of service discovery.

In most setups, CoreDNS is the default DNS resolution provider, deployed and running as CoreDNS Pods within the cluster.

Containerized applications running as Pods can use an autogenerated Service name that maps to the Kubernetes Service's IP (Cluster IP), such as my-svc.default.svc.cluster.local. This gives Pods more flexibility for internal service discovery: applications resolve a hostname instead of hard-coding a private IP address, so they keep working when deployed to another environment. The kube-dns add-on can usually also resolve external DNS records, so Pods can reach services on the Internet as well.
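As a rough illustration of how a short name becomes such a Service FQDN, here is a minimal Python sketch of the search-list expansion a Pod's stub resolver performs. It assumes the default Pod /etc/resolv.conf generated by Kubernetes (search <namespace>.svc.cluster.local svc.cluster.local cluster.local, options ndots:5) and ignores any extra search domains inherited from the node; the function name candidate_names is hypothetical.

```python
def candidate_names(name: str, namespace: str = "default",
                    cluster_domain: str = "cluster.local", ndots: int = 5) -> list:
    """Return the FQDNs a glibc-style stub resolver would try, in order.

    Assumes the default Kubernetes Pod resolv.conf search list; real Pods may
    also carry search domains inherited from the node (ignored here).
    """
    if name.endswith("."):
        return [name]  # already absolute: the search list is not applied
    search = [f"{namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{domain}." for domain in search]
    if name.count(".") >= ndots:
        return [name + "."] + expanded  # enough dots: try the name as-is first
    return expanded + [name + "."]      # too few dots: walk the search list first


print(candidate_names("quote-redis-cluster"))
```

With ndots:5, even an external name such as example.com (one dot) goes through the search list before being tried as-is, which is why a Pod typically sends several queries for a single external name.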

Symptom

Generally, everything works well for common use cases, and you probably won't see any issue when running your production workload. But if your application needs to resolve a DNS record with a large response payload (for example, an ElastiCache endpoint that sometimes returns a larger payload), you might notice the issue: your application never resolves the record correctly.

A simple way to test and debug is to install dig in your Pod and compare the results:

# First, check whether the external DNS provider can resolve the record

# Make sure your Pod can reach the Internet;

# otherwise, replace 8.8.8.8 with a private DNS server that your Pod can reach

$ dig example.com @8.8.8.8

# Then check whether CoreDNS resolves the record correctly

$ dig example.com @<YOUR_COREDNS_SERVICE_IP>

$ dig example.com @<YOUR_COREDNS_PRIVATE_IP>

# Check whether other records show the same issue

$ dig helloworld.com @<YOUR_COREDNS_PRIVATE_IP>

For example, suppose my Kubernetes cluster runs the following CoreDNS Pods and kube-dns Service:

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system pod/coredns-9b6bd4456-97l97 1/1 Running 0 5d3h 192.168.19.7 XXXXXXXXXXXXXXX.compute.internal <none> <none>

kube-system pod/coredns-9b6bd4456-btqpz 1/1 Running 0 5d3h 192.168.1.115 XXXXXXXXXXXXXXX.compute.internal <none> <none>

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kube-system service/kube-dns ClusterIP 10.100.0.10 <none> 53/UDP,53/TCP 5d3h k8s-app=kube-dns

I can use commands like the following to find which point causes the failure:

dig example.com @10.100.0.10

dig example.com @192.168.19.7

dig example.com @192.168.1.115

dig helloworld.com @10.100.0.10

dig helloworld.com @192.168.19.7

dig helloworld.com @192.168.1.115

If queries for a specific record fail against both the Service IP and the CoreDNS Pod IPs, while other records resolve fine through the same CoreDNS, then something goes wrong when CoreDNS (acting as the DNS resolver) forwards queries for that specific target. This is the issue this article discusses. However, if the CoreDNS Pod IPs work but the Service IP (Cluster IP) fails, or none of them succeed, focus your investigation on the Kubernetes networking layer instead, such as the CNI plugin, kube-proxy, your cloud provider's settings, or anything else that can break Pod-to-Pod communication or host network translation.

How to reproduce

In my testing, I ran a Kubernetes cluster on Amazon EKS (1.15) with the default deployments (CoreDNS, AWS CNI Plugin and kube-proxy). The issue can be reproduced with the following steps:

$ kubectl create svc externalname quote-redis-cluster --external-name <MY_DOMAIN>

$ kubectl create deployment nginx --image=nginx

$ kubectl exec -it <nginx> -- bash

$ apt-get update && apt-get install -y dnsutils && nslookup quote-redis-cluster

After these steps, my EKS cluster had one nginx Pod and two CoreDNS Pods running:

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default pod/nginx-554b9c67f9-w9bb4 1/1 Running 0 40s 192.168.70.172 XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX.compute.internal <none> <none>

...

kube-system pod/coredns-9b6bd4456-6q9b5 1/1 Running 0 3d3h 192.168.39.186 XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX.compute.internal <none> <none>

kube-system pod/coredns-9b6bd4456-8qgs8 1/1 Running 0 3d3h 192.168.69.216 XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX.compute.internal <none> <none>

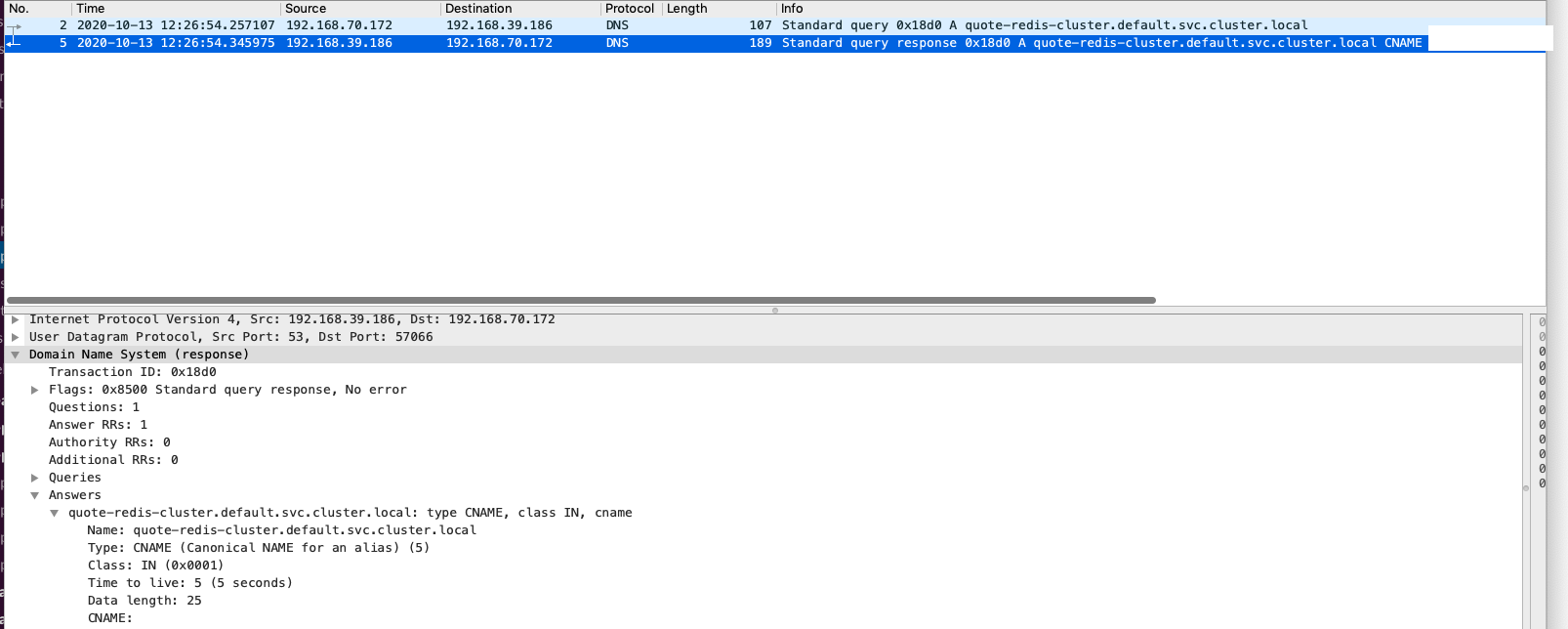

Here is the problem. I used nslookup to resolve the domain, pointing it directly at the CoreDNS Pod 192.168.39.186 instead of the kube-dns Cluster IP. This rules out any issue caused by the iptables-based Kubernetes Service networking.

The result contains only the canonical name (CNAME, example.com), without any IP address:

$ kubectl exec -it nginx-554b9c67f9-w9bb4 bash

root@nginx-554b9c67f9-w9bb4:/# nslookup quote-redis-cluster 192.168.39.186

Server: 192.168.39.186

Address: 192.168.39.186#53

quote-redis-cluster.default.svc.cluster.local canonical name = example.com.

Note: The domain name example.com has ordinary A records with many IP addresses. (In the real case, example.com was an Amazon ElastiCache endpoint that returned the IP addresses of the ElastiCache nodes.)

However, another domain resolves to IP addresses successfully with nslookup, in the same container and with the same DNS resolver (192.168.39.186):

$ kubectl create svc externalname my-endpoint --external-name success-domain.com

$ kubectl exec -it nginx-554b9c67f9-w9bb4 bash

root@nginx-554b9c67f9-w9bb4:/# nslookup my-endpoint 192.168.39.186

Server: 192.168.39.186

Address: 192.168.39.186#53

my-endpoint.default.svc.cluster.local canonical name = success-domain.com.

Name: success-domain.com.

Address: 11.11.11.11

Name: success-domain.com.

Address: 22.22.22.22

This difference is the issue I would like to talk about. Let's break down why the results differ.

Deep dive into the root cause

Let's break down what happens inside. To follow the analysis, you need to know these IP addresses:

- 192.168.70.172 (Client - nslookup): The nginx Pod; in this Pod I ran nslookup to test DNS resolution.

- 192.168.39.186 (CoreDNS): The private IP of a CoreDNS Pod, acting as a DNS resolver behind the kube-dns Service.

- 192.168.69.216 (CoreDNS): The private IP of the other CoreDNS Pod, acting as a DNS resolver behind the kube-dns Service.

- 192.168.0.2 (AmazonProvidedDNS): The default DNS resolver in the VPC, which EC2 instances can use.

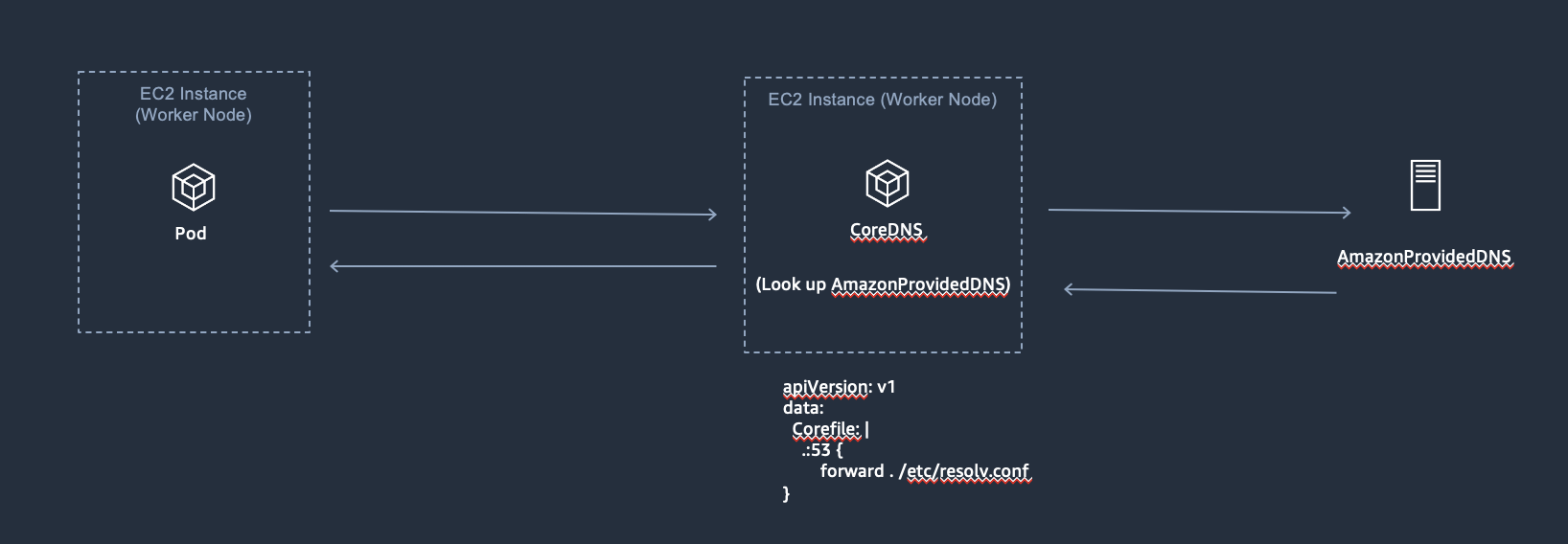

On EKS, the DNS resolution flow looks like this:

First, the Pod issues a DNS query to CoreDNS. When CoreDNS receives the query, it checks whether a cached record exists; otherwise, it forwards the request to the upstream DNS resolver specified in its configuration.

CoreDNS looks up the upstream resolver in /etc/resolv.conf, which the CoreDNS Pod inherits from its node (in my environment, AmazonProvidedDNS), as configured in the Corefile:

apiVersion: v1
data:
  Corefile: |
    .:53 {
        forward . /etc/resolv.conf
        ...
    }

Following this model, responses travel back along the same path to the client. In the normal (happy) case, we can always query DNS A records and get addresses in every response:

Now let's look at what happens in the failing case:

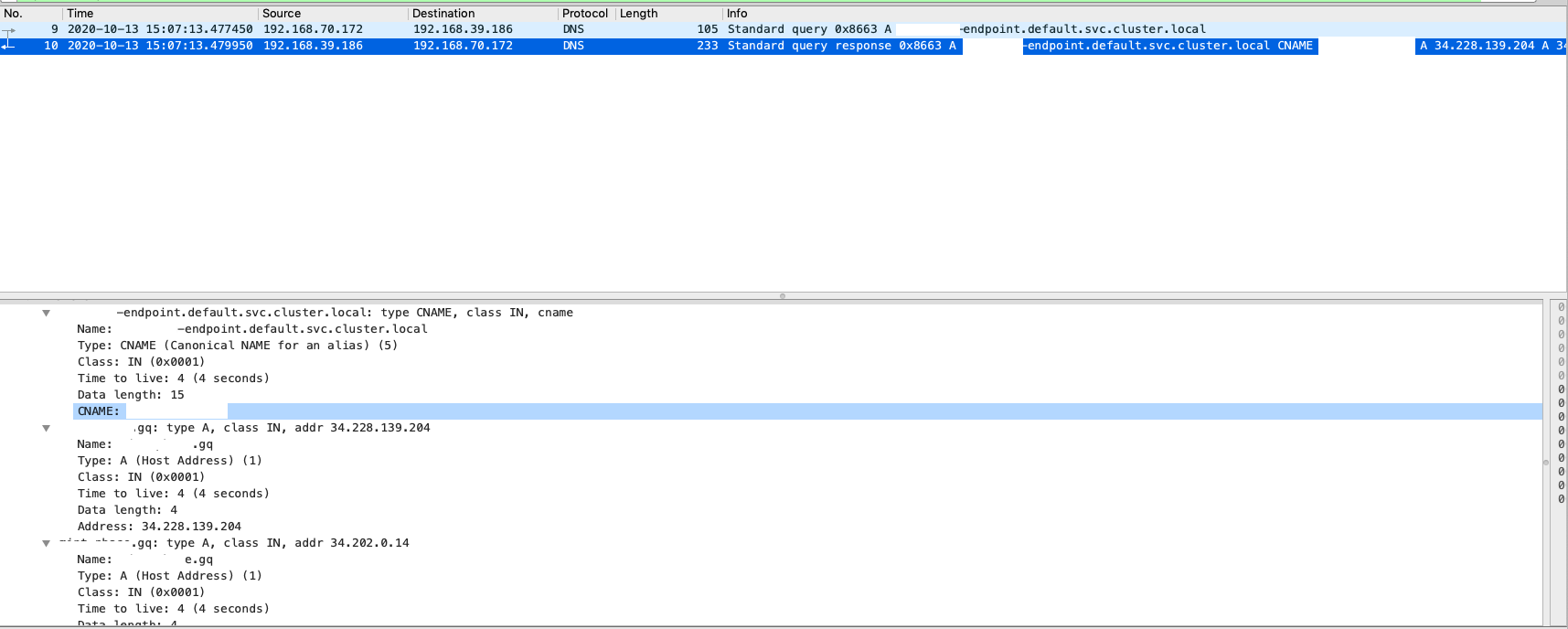

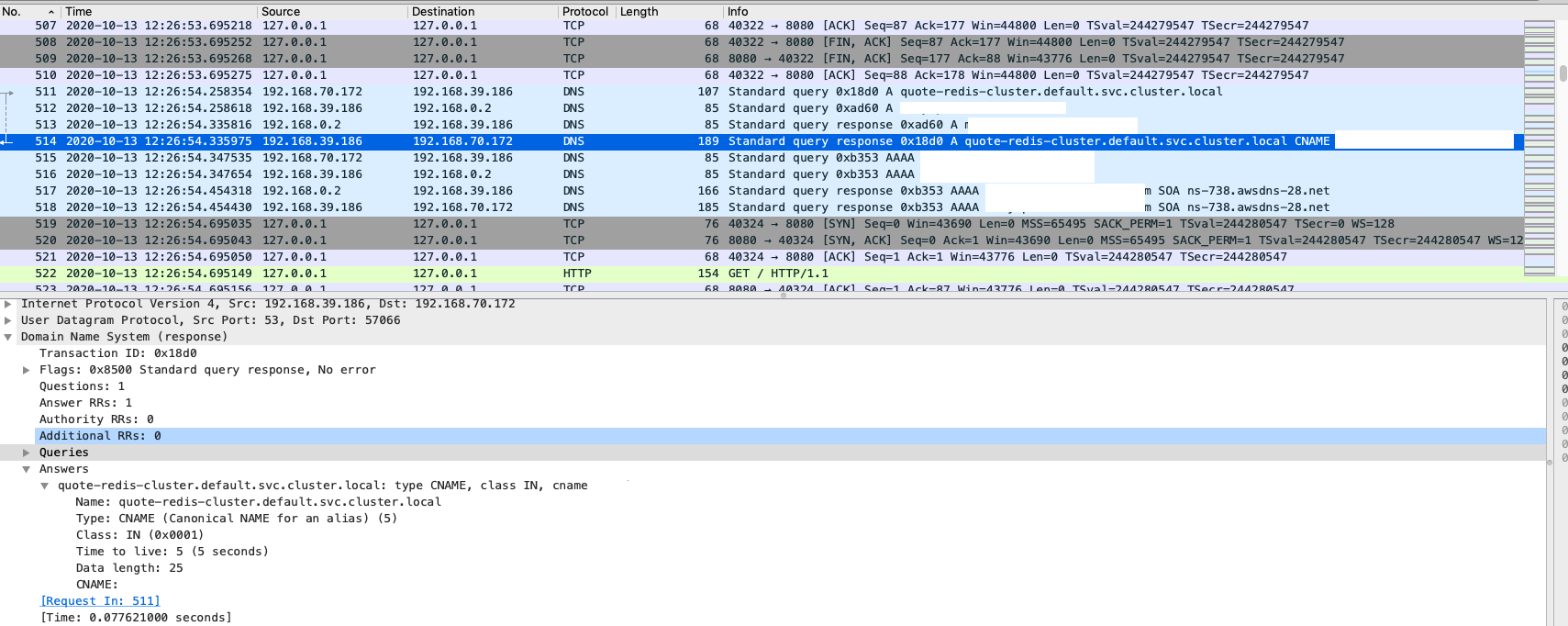

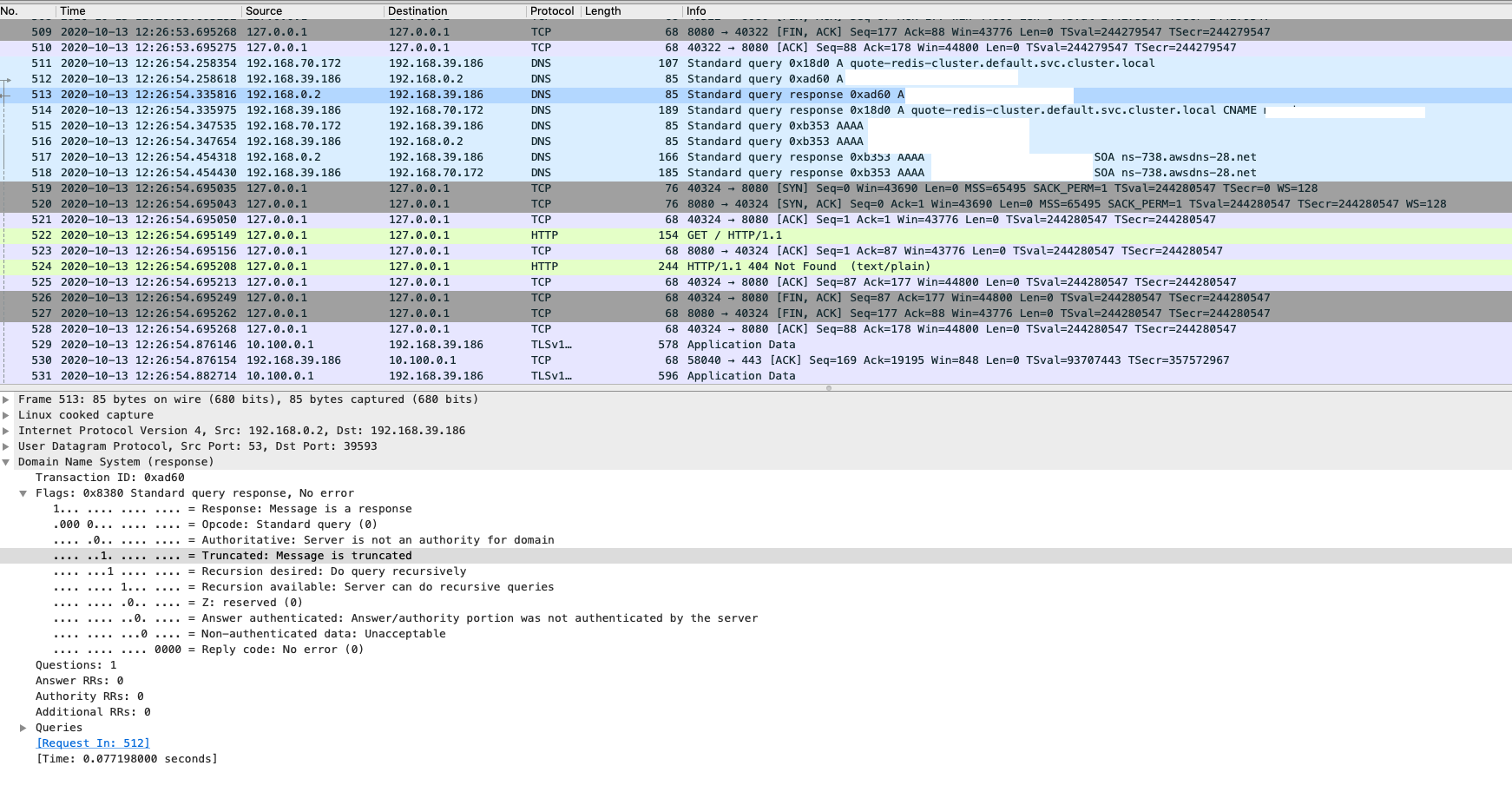

1) On the client side, the captured packets show only a response with the CNAME record, matching the nslookup output:

2) On the CoreDNS node, the captured packets show that:

- The CoreDNS Pod queried AmazonProvidedDNS for the record

- The response contained only the CNAME record

192.168.70.172 -> 192.168.39.186 (Client queries A quote-redis-cluster.default.svc.cluster.local from CoreDNS)

192.168.39.186 -> 192.168.0.2 (CoreDNS queries A quote-redis-cluster.default.svc.cluster.local from AmazonProvidedDNS)

192.168.0.2 -> 192.168.39.186 (AmazonProvidedDNS responds with the record, containing only the CNAME)

192.168.39.186 -> 192.168.70.172 (CoreDNS Pod responds with the CNAME record)

Looking closer at the packets captured on the CoreDNS node reveals the key point: the truncated flag (TC flag) was set, which means the DNS response was truncated.

At this stage, we can identify the issue: the DNS response was truncated.
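The TC flag is a single bit in the fixed 12-byte DNS header (RFC#1035, section 4.1.1). As a minimal sketch using only the Python standard library, the hypothetical function parse_header below decodes that header from a raw DNS message, which is what a packet analyzer does when it reports the truncated flag:

```python
import struct


def parse_header(message: bytes) -> dict:
    """Decode the fixed 12-byte DNS header (RFC 1035, section 4.1.1)."""
    if len(message) < 12:
        raise ValueError("DNS message is shorter than the 12-byte header")
    msg_id, flags, qdcount, ancount, nscount, arcount = struct.unpack("!6H", message[:12])
    return {
        "id": msg_id,
        "qr": bool(flags & 0x8000),   # 1 = this message is a response
        "opcode": (flags >> 11) & 0xF,
        "aa": bool(flags & 0x0400),   # authoritative answer
        "tc": bool(flags & 0x0200),   # truncated: the flag seen in the capture
        "rd": bool(flags & 0x0100),   # recursion desired
        "ra": bool(flags & 0x0080),   # recursion available
        "rcode": flags & 0x000F,
        "qdcount": qdcount,
        "ancount": ancount,
        "nscount": nscount,
        "arcount": arcount,
    }


# A response header with QR, TC, RD and RA set (flags word 0x8380)
sample = struct.pack("!6H", 0x1234, 0x8380, 1, 1, 0, 0)
print(parse_header(sample)["tc"])  # True
```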

Why the DNS response (message) was truncated?

When you see a truncated message, you might say: "Holy … sh*t, the DNS response is missing! The upstream DNS resolver must have eaten the payload and isn't working properly!" However, this is expected behavior, rooted in how DNS was designed back in the 1980s.

DNS primarily uses the User Datagram Protocol (UDP) on port number 53 to serve requests. Queries generally consist of a single UDP request from the client followed by a single UDP reply from the server. (Wikipedia)

A DNS message carried over UDP is limited to 512 bytes (RFC#1035). That worked well in the early days, but as the Internet grew, people realized the original design could not fully satisfy their requirements and wanted to carry more information in DNS messages (such as IPv6 addresses). RFC#1123 already addressed the case where a single DNS payload exceeds the 512-byte UDP limit:

It is also clear that some new DNS record types defined in the future will contain information exceeding the 512 byte limit that applies to UDP, and hence will require TCP. Thus, resolvers and name servers should implement TCP services as a backup to UDP today, with the knowledge that they will require the TCP service in the future.

RFC#5966, "DNS Transport over TCP - Implementation Requirements", describes this behavior. The solutions are to use EDNS, or to retransmit the DNS query over TCP when the truncation flag (TC flag) is set:

In the absence of EDNS0 (Extension Mechanisms for DNS 0), the normal behaviour of any DNS server needing to send a UDP response that would exceed the 512-byte limit is for the server to truncate the response so that it fits within that limit and then set the TC flag in the response header.

When the client receives such a response, it takes the TC flag as an indication that it should retry over TCP instead.

Therefore, when an answer exceeds 512 bytes, the upstream server sets the TC flag to tell the downstream side that the message is truncated. When both client and server support EDNS, larger UDP payloads can be used instead (the client advertises its buffer size in an OPT record in the additional section). Otherwise, the query is sent again over the Transmission Control Protocol (TCP). TCP is also used for tasks such as zone transfers, and some resolver implementations use TCP for all queries.
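To make the retry rule concrete, here is a minimal Python sketch of a stub client that queries over UDP and, when the TC flag is set, re-sends the same query over TCP with the 2-byte length prefix that DNS over TCP requires (RFC#1035, section 4.2.2). It uses only the standard library; the function names are hypothetical, and a real client would also validate the response ID and handle retries.

```python
import socket
import struct

TC_BIT = 0x0200  # truncation flag in the DNS header flags word


def is_truncated(response: bytes) -> bool:
    """True if the TC bit is set in the header of a raw DNS response."""
    (flags,) = struct.unpack("!H", response[2:4])
    return bool(flags & TC_BIT)


def query_udp(server: str, message: bytes, timeout: float = 2.0) -> bytes:
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(message, (server, 53))
        data, _ = sock.recvfrom(65535)
        return data


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed before the full message arrived")
        buf += chunk
    return buf


def query_tcp(server: str, message: bytes, timeout: float = 2.0) -> bytes:
    with socket.create_connection((server, 53), timeout=timeout) as sock:
        # DNS over TCP: every message is prefixed with its 2-byte length
        sock.sendall(struct.pack("!H", len(message)) + message)
        (length,) = struct.unpack("!H", _recv_exact(sock, 2))
        return _recv_exact(sock, length)


def resolve(server: str, message: bytes, udp=query_udp, tcp=query_tcp) -> bytes:
    """Query over UDP first; if the reply is truncated, retry the same query over TCP."""
    response = udp(server, message)
    if is_truncated(response):
        response = tcp(server, message)
    return response
```

The udp and tcp parameters only exist so the transports can be swapped out, for example with fakes in a test.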

How to remedy the issue?

The symptom typically appears when Pods resolve DNS records over UDP through CoreDNS. If the response for a domain name exceeds 512 bytes, it hits the default UDP limit for DNS messages, and the response is expected to come back truncated with the TC flag set.

However, on Amazon EKS, CoreDNS by default does not retransmit the query over TCP when it receives a response with the TC flag set. Instead, it only relays the truncated message back to the client, so the client never receives the missing records.

To remedy the issue, you can adopt one of the following solutions:

Solution 1: Using EDNS0

For common use cases, the default 512 bytes is enough, because we don't expect a DNS response to carry a large number of IP addresses. However, some cases need more, such as:

- The name resolves to many targets because DNS round-robin is used to balance the workload.

- It is an ElastiCache endpoint with many nodes.

- Other use cases that put long messages in the DNS response.
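For a rough sense of how quickly 512 bytes runs out, here is a back-of-the-envelope Python sketch. It assumes a response containing only the question and A records whose owner names are 2-byte compression pointers (RFC#1035, section 4.1.4), and it ignores the OPT record and any CNAME record; the helper names are hypothetical.

```python
def encoded_name_length(name: str) -> int:
    """Bytes an uncompressed domain name takes on the wire."""
    labels = [label for label in name.rstrip(".").split(".") if label]
    return sum(1 + len(label) for label in labels) + 1  # length bytes + root label


def max_a_records(qname: str, limit: int = 512) -> int:
    """How many A records fit in a response of `limit` bytes (simplified)."""
    header = 12
    question = encoded_name_length(qname) + 4  # QTYPE + QCLASS
    # One A record: 2 (name pointer) + 2 TYPE + 2 CLASS + 4 TTL
    # + 2 RDLENGTH + 4 RDATA (IPv4 address) = 16 bytes
    per_record = 16
    return (limit - header - question) // per_record


print(max_a_records("example.com"))
```

Under these assumptions, a short name such as example.com fits about 30 A records in 512 bytes; a long question name, or a CNAME record in front of the answers (as with an ExternalName Service pointing at an ElastiCache endpoint), leaves room for fewer.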

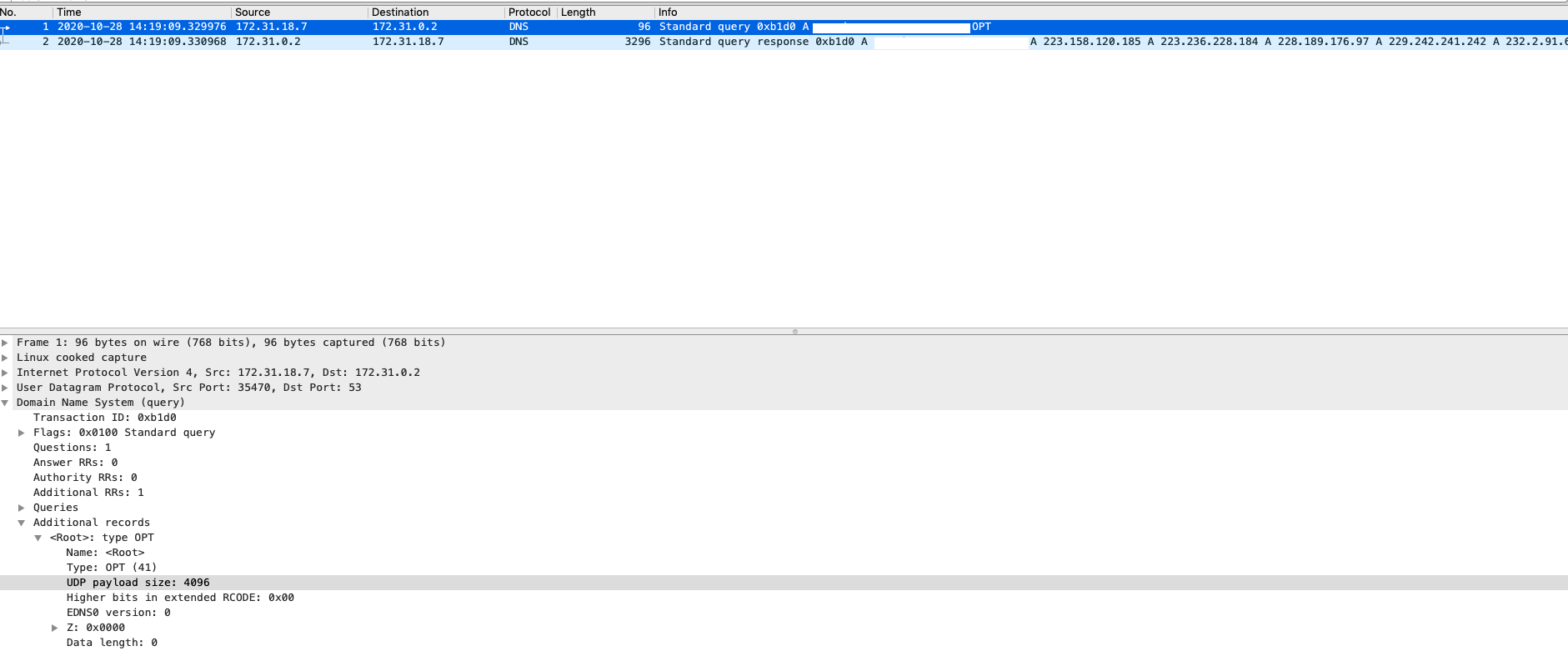

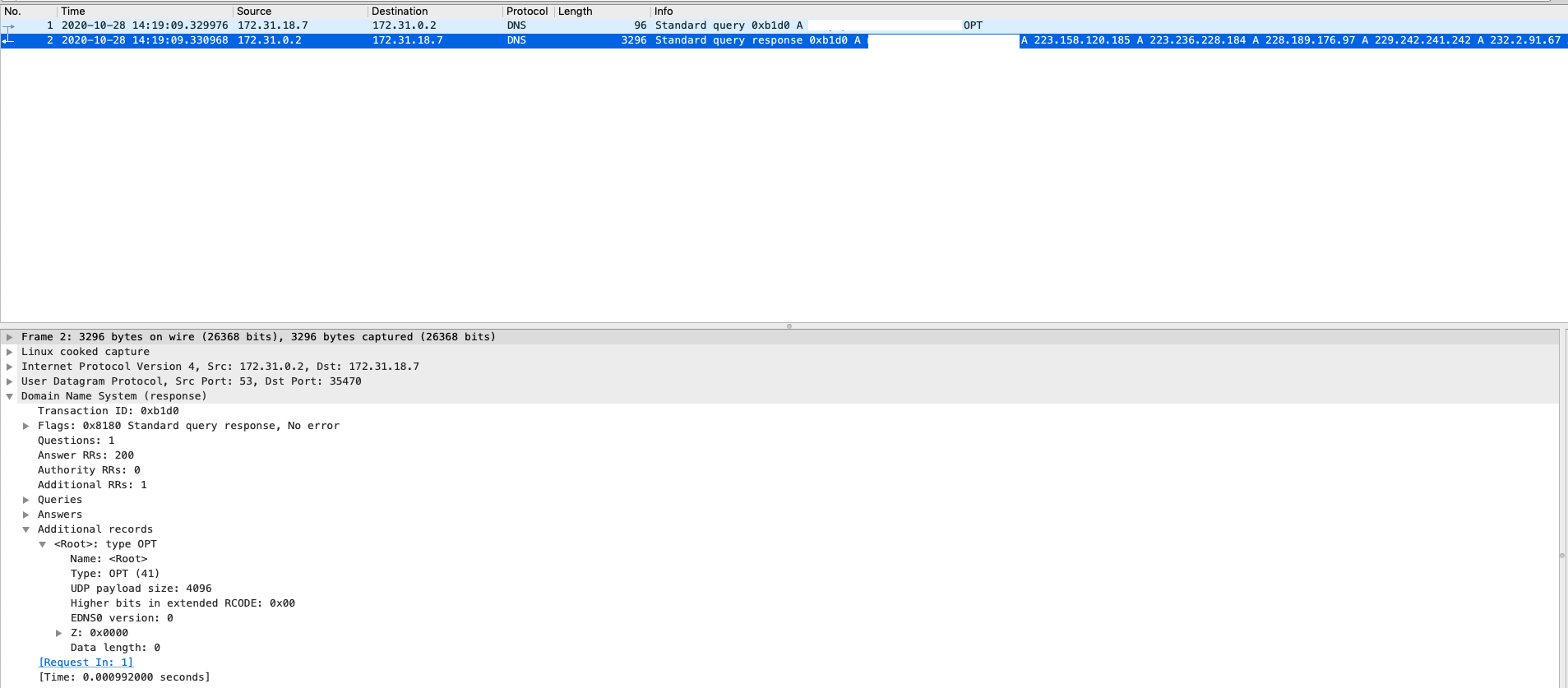

If you expect a large DNS response, the client can still send additional EDNS information to advertise a larger buffer size for a single UDP response. CoreDNS forwards this EDNS0 information (the OPT record in the additional section) upstream.

The workflow can be:

1) Resolve the domain by querying its CNAME record.

2) Once you have the canonical name, send another A record query with the EDNS option.

(You can implement this logic in your own application. There are many ways to enable the EDNS option, so please refer to the documentation of your programming language or DNS library.)
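As one possible way to implement step 2, here is a minimal Python sketch that builds the wire-format query by hand with the standard library and appends an EDNS0 OPT pseudo-record (RFC#6891) advertising a 4096-byte UDP buffer, the same value dig uses in the output that follows. The function names are hypothetical; in practice a DNS library would normally build this for you.

```python
import random
import struct


def encode_name(name: str) -> bytes:
    """Encode a domain name as length-prefixed labels ending with the root label."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) < 64:
            raise ValueError(f"invalid label: {label!r}")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_query(name: str, qtype: int = 1, edns_udp_size: int = 0,
                query_id: int = -1) -> bytes:
    """Build a recursive query; qtype 1 = A, 5 = CNAME."""
    if query_id < 0:
        query_id = random.randint(0, 0xFFFF)
    arcount = 1 if edns_udp_size else 0
    # Header: ID, flags (RD=1), QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT
    header = struct.pack("!6H", query_id, 0x0100, 1, 0, 0, arcount)
    question = encode_name(name) + struct.pack("!2H", qtype, 1)  # QCLASS=IN
    message = header + question
    if edns_udp_size:
        # OPT pseudo-RR: root name, TYPE=41, CLASS=UDP payload size,
        # TTL=extended RCODE/version/flags (all zero), RDLENGTH=0
        message += b"\x00" + struct.pack("!HHIH", 41, edns_udp_size, 0, 0)
    return message


query = build_query("example.com", qtype=1, edns_udp_size=4096)
print(len(query))  # 40 bytes: 12 header + 17 question + 11 OPT
```

For step 1, the same helper can build the CNAME query with qtype=5; the resulting bytes can then be sent over UDP to the CoreDNS Service IP.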

Here is an example of the output when using dig:

$ dig -t CNAME my-svc.default.svc.cluster.local

... (Getting canonical name of the record, e.g. example.com) ...

$ dig example.com

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 51739

;; flags: qr rd ra; QUERY: 1, ANSWER: 22, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 7fb5776972bf2aa4 (echoed)

;; QUESTION SECTION:

; example.com. IN A

;; ANSWER SECTION:

example.com. 15 IN A 10.4.85.47

example.com. 15 IN A 10.4.83.252

example.com. 15 IN A 10.4.82.121

...

example.com. 15 IN A 10.4.82.186

The output shows that dig, by default, adds an option that increases the allowed UDP payload size; in this case it advertises 4096 bytes:

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

Below is a sample packet using dig with EDNS:

This method generally has to be implemented in the application. If you would rather have CoreDNS set the buffer size for the UDP queries it sends, you can use the bufsize plugin, which the CoreDNS documentation describes as limiting a requester's UDP payload size.

Here is an example that limits the buffer size of outgoing queries to the resolver (172.31.0.10):

. {

bufsize 512

forward . 172.31.0.10

}

However, allowing a larger payload size for every DNS query can expose you to DNS vulnerabilities (large UDP responses are prone to IP fragmentation) and may degrade performance. Please refer to the CoreDNS documentation for more details.

Solution 2: Having TCP retransmit when the DNS query was truncated (Recommended)

As mentioned, the normal behaviour of any DNS server needing to send a UDP response that would exceed the 512-byte limit is for the server to truncate the response so that it fits within that limit and then set the TC flag in the response header. When the client receives such a response, it takes the TC flag as an indication that it should retry over TCP instead.

By analyzing the network flow and packets, we can be certain that CoreDNS does not retransmit over TCP when it receives a truncated message. So the question is: can we make CoreDNS retry with TCP when a message is truncated?

- And the answer is … YES!

Since version 1.6.2, the CoreDNS forward plugin supports the prefer_udp option to handle truncated responses when forwarding DNS queries.

The documentation explains the prefer_udp option as follows:

Try first using UDP even when the request comes in over TCP. If response is truncated (TC flag set in response) then do another attempt over TCP. In case if both force_tcp and prefer_udp options specified the force_tcp takes precedence.
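The transport choice described above can be modeled in a few lines. The Python sketch below is a simplified model written from the option descriptions in the forward plugin documentation (force_tcp, prefer_udp, and otherwise reusing the request's protocol), not CoreDNS's actual Go implementation; send_udp and send_tcp are hypothetical callables standing in for real upstream exchanges.

```python
from typing import Callable, List, Tuple

TC_BIT = 0x0200  # truncation flag in the DNS header flags word


def truncated(response: bytes) -> bool:
    # The flags word is bytes 2-3 of the DNS header
    return bool(int.from_bytes(response[2:4], "big") & TC_BIT)


def forward(request_over_tcp: bool,
            send_udp: Callable[[], bytes],
            send_tcp: Callable[[], bytes],
            force_tcp: bool = False,
            prefer_udp: bool = False) -> Tuple[bytes, List[str]]:
    """Return the upstream response and the transports used, in order."""
    used: List[str] = []
    if force_tcp:                          # force_tcp takes precedence
        used.append("tcp")
        return send_tcp(), used
    if prefer_udp or not request_over_tcp:
        used.append("udp")
        response = send_udp()
        if prefer_udp and truncated(response):
            used.append("tcp")             # retry over TCP after a truncated reply
            response = send_tcp()
        return response, used
    used.append("tcp")                     # default: same protocol as the request
    return send_tcp(), used
```

In this model, without prefer_udp a UDP request whose upstream reply is truncated gets that truncated reply back unchanged, which matches the behavior observed in the packet capture earlier in this article.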

Here is an example of adding the option to the forward plugin:

forward . /etc/resolv.conf {
    prefer_udp
}

You can add this option to your configuration if you notice that truncated responses are received but CoreDNS does not handle them. Still, test it first and watch for any performance impact it may cause. In a large cluster, scaling out your CoreDNS Pods can also help.

Conclusion

In this article, we took a deep dive into a DNS resolution issue with CoreDNS when running workloads on Amazon EKS and broke down the details by inspecting network packets. The root cause is that the message was truncated because the response payload exceeded 512 bytes, so the client (Pod) could not get the correct result with IP addresses. The truncated response comes back with the TC flag set.

This issue stems from the design of DNS over UDP, and message truncation is expected when the payload is large. In addition, as mentioned in RFC#1123, the requester is responsible for handling the situation when it sees that the TC flag is set. This article walked through the details and the flow based on packet analysis.

To remedy the problem, this article also covered methods that can be applied on the client side or in CoreDNS: using EDNS, or applying TCP retransmission. Both can be applied in CoreDNS.
